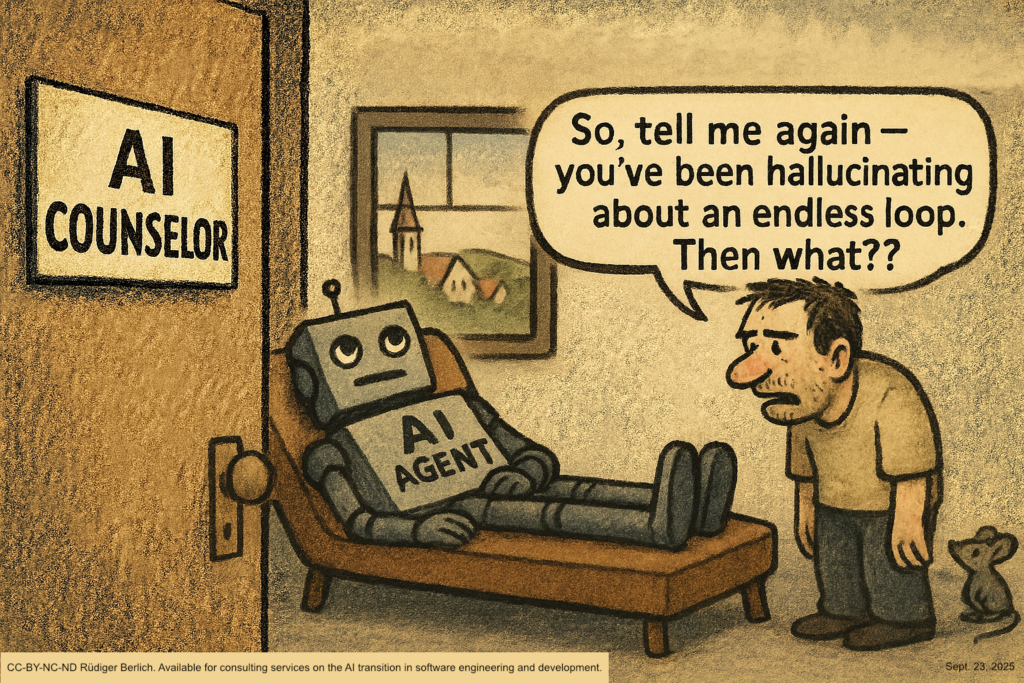

No matter what you hear, hallucinations are a real problem when working with AIs. I suspect (though I cannot prove it) that the varying availability of compute power for individual requests also plays a role; for example, you might get better answers during off-peak times. In my experience, however, the issue is less pronounced when working with AI agents in software development than in domains without inherent constraints. After all, code fragments must fit together, so many errors become apparent immediately: agents usually discover their mistakes as soon as they try to compile the code. Particularly when coordinating parallel swarms of AI agents (see, for example, Claude Flow), the AIs essentially provide their own checks and balances. Embedding development into a test-driven workflow adds further control. Still, human verification and validation remain indispensable.

Have fun,

Rüdiger